I’ve been putting together a rough-and-ready comparison of the price and listed functionality of Red Hat’s new RHEV-H virtualization platform. It’s built on KVM and a small-footprint version of Red Hat Enterprise Linux, and it’s all managed from a Windows-based client.

I say “listed functionality” because Red Hat are the only x86 virtualization vendor I can think of that doesn’t let you quickly download a version of their software. That’s slightly ironic, given that they’re an open-source developer and their competitors VMware, Microsoft and Citrix are all historically closed-source companies (though Citrix have open-sourced their base XenServer virtualization system).

Assuming I can get a trial version of RHEV-H and its management client, I’ll write a new post comparing my experiences with it against VMware vSphere.

On paper, RHEV-H is a pretty functional product, supporting:

• High availability – failover between physical servers

• Live migration – online movement of VMs between physical hosts without interruption

• System scheduler – dynamic live migration between physical hosts based on physical resource availability

• Maintenance manager

• Image management

• Monitoring and reporting

These are the major components of a virtualization platform. Indeed, live migration and the system scheduler are high-end features on the other virtualization platforms, so it’s a nice addition for Red Hat to include them in its “one-size-fits-all” package.

The major player in the virtualization arena is without a doubt VMware, and their vSphere Advanced product will deliver the functionality that pretty much any company would want, though they also have an “Enterprise Plus” option that adds even more for larger corporations.

VMware vSphere Advanced includes:

- VMware ESXi or VMware ESX (deployment-time choice)

- VMware vStorage APIs / VMware Consolidated Backup (VCB)

- VMware Update Manager

- VMware High Availability (HA)

- VMware vStorage Thin Provisioning

- VMware VMotion™

- VMware Hot Add

- VMware Fault Tolerance

- VMware Data Recovery

- VMware vShield Zones

Much of that functionality, especially Fault Tolerance, vShield Zones and the vStorage APIs, simply isn’t matched by any other virtualisation platform right now, whatever the price. However, vSphere Standard misses out VMotion and Fault Tolerance, along with the thin-provisioning and data recovery features. It’s still an excellent product, but it means more management overhead whenever you need to arrange physical server downtime, etc.

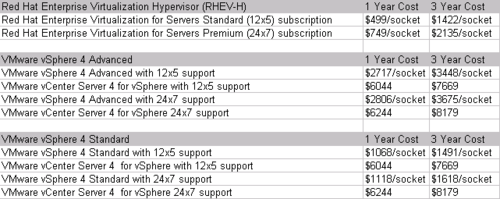

Now to the prices. I’ve put together the list prices of RHEV-H, VMware vSphere Standard and vSphere Advanced in a table, along with a sample configuration based on 1 management server and 5 physical hosts, each with 2 sockets.

Because Tumblr doesn’t seem to let you embed a table, I’ve had to include the table as an image, sorry about that.

As you can see, RHEV-H is the cheapest software option of the 3, though its 3-year cost advantage over vSphere Standard isn’t huge, especially once 24×7 support is included. vSphere Advanced costs significantly more, but it also delivers a lot more, possibly more than your own company needs.

Below are the full costs I’ve used to calculate the above results. Please let me know if you think I’ve got anything wrong or missed anything out.

The prices above were taken from the VMware online store and from the Red Hat Virtualization Cost PDF, both on 29th December 2009.
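To make the arithmetic behind the sample configuration a little more explicit, here’s a minimal Python sketch. It assumes a simplified pricing model in which each product has an optional one-off licence per CPU socket, a yearly per-socket subscription or support charge, and a separate cost for the management server. The `PricingModel` class and `total_cost` function are hypothetical names of my own, and the numbers in the example are placeholders, not the list prices from the table.

```python
from dataclasses import dataclass


@dataclass
class PricingModel:
    """Hypothetical per-socket pricing inputs, to be filled in from the vendors' price lists."""
    name: str
    licence_per_socket: float  # one-off licence cost per CPU socket (0 for a pure subscription)
    yearly_per_socket: float   # subscription or support cost per CPU socket, per year
    management_licence: float  # one-off cost of the management server licence
    management_yearly: float   # yearly support cost for the management server


def total_cost(model: PricingModel, hosts: int = 5, sockets_per_host: int = 2,
               years: int = 3) -> float:
    """Total cost for 1 management server plus `hosts` physical hosts over `years` years."""
    sockets = hosts * sockets_per_host
    # One-off costs are paid once, regardless of the period.
    upfront = model.licence_per_socket * sockets + model.management_licence
    # Recurring costs are paid every year for every socket, plus the management server.
    recurring = (model.yearly_per_socket * sockets + model.management_yearly) * years
    return upfront + recurring


if __name__ == "__main__":
    # Placeholder numbers only, NOT real list prices.
    example = PricingModel("example", licence_per_socket=100, yearly_per_socket=20,
                           management_licence=500, management_yearly=50)
    print(f"{example.name}: {total_cost(example):.2f}")
```

For a pure subscription product you’d set the one-off licence fields to 0; for a perpetual licence with annual support you’d fill in both, which is what makes the 3-year comparison fair between the two styles of pricing.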

Overall, it looks like the pricing of Red Hat’s RHEV-H system makes it worth the effort of acquiring it and giving it a solid shakedown, but it’s not going to force VMware into radically changing their own pricing structure.

vSphere Advanced is streets ahead in terms of functionality. And while vSphere Standard may lack some of the functionality of RHEV-H, the widespread adoption of VMware products in general means it makes up for it in other areas, especially management and backup and restore, where RHEV-H has a long way to go to catch up.